komanawa.gw_detect_power#

created matt_dumont on: 6/07/23

Submodules#

- komanawa.gw_detect_power.base_detection_calculator

- komanawa.gw_detect_power.change_detection_counterfactual

- komanawa.gw_detect_power.change_detection_slope

- komanawa.gw_detect_power.create_lookup_tables

- komanawa.gw_detect_power.lookup_table_inits

- komanawa.gw_detect_power.timetest_slope

- komanawa.gw_detect_power.version

Classes#

This class is used to calculate the counterfactual detection power of a pair of auto created concentration time series. The user specifies an initial concentration, and a target concentration for both a base and alternative scenario. Other parameters include groundwater age distribution models and parameters, implementation time and the slope of the previous data. |

|

This class is used to calculate the slope detection power of an auto created concentration |

|

The DetectionPowerCalculator has been depreciated in version v2.0.0. To retain the old capability use v1.0.0. |

|

This class is used to calculate the counterfactual detection power of a pair of concentration time series The user specifies the true concentration time series for the base and alt scenarios and the noise level for both scenarios. The power is calculated by adding many noise realisations to the true concentration time series and running a paired t test or wilcoxon signed rank test to determine if the null hypothesis (The scenarios are the same) can be rejected. |

|

The DetectionPowerSlope class is used to calculate the power of a change detection test based on observing |

Package Contents#

- class AutoDetectionPowerCounterFactual(significance_mode, nsims=1000, p_value=0.05, min_samples=10, alternative='alt!=base', wx_zero_method='wilcox', wx_correction=False, wx_method='auto', ncores=None, log_level=logging.INFO, return_true_conc=False, return_noisy_conc_itters=0, only_significant_noisy=False)[source]#

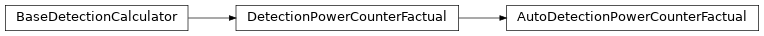

Bases:

DetectionPowerCounterFactual

This class is used to calculate the counterfactual detection power of a pair of auto created concentration time series. The user specifies an initial concentration, and a target concentration for both a base and alternative scenario. Other parameters include groundwater age distribution models and parameters, implementation time and the slope of the previous data.

The user then specifies the sampling duration, delay, and frequency. The power is calculated by adding many user specified noise realisations to both the base and alternative concentration time series and running a paired t test or wilcoxon signed rank test to determine if the null hypothesis (The scenarios are the same) can be rejected.

The Power is calculated as the percentage (0-100) of simulations which reject the null hypothesis.

- Parameters:

significance_mode –

str, one of:

’paired-t-test’: paired t test (parametric), scipy.stats.ttest_rel

’wilcoxon-signed-rank-test’: wilcoxon signed rank test (non-parametric), scipy.stats.wilcoxon

nsims – number of noise simulations to run for each change detection (e.g. nsims=1000, power= number of detected changes/1000 noise simulations)

p_value – minimum p value (see also alternative), if p >= p_value the null hypothesis will not be rejected (base and alt are the same) p < p_value the null hypothesis will be rejected (base and alt are different)

min_samples – minimum number of samples required, less than this number of samples will raise an exception

alternative –

str, one of:

’alt!=base’: two sided test (default),

’alt<base’: one sided test ~

’alt>base’

wx_zero_method –

str, one of:

“wilcox”: Discards all zero-differences (default); see [4].

“pratt”: Includes zero-differences in the ranking process, but drops the ranks of the zeros (more conservative); see [3]. In this case, the normal approximation is adjusted as in [5].

“zsplit”: Includes zero-differences in the ranking process and splits the zero rank between positive and negative ones.

for more info see: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.wilcoxon.html

wx_correction – bool, If True, apply continuity correction by adjusting the Wilcoxon rank statistic by 0.5 towards the mean value when computing the z-statistic. Default is False.

wx_method – str, see scipy.stats.wilcoxon for more info

ncores – number of cores to use for multiprocessing, None will use all available cores

log_level – logging level for multiprocessing subprocesses

return_true_conc – return the true concentration time series for each simulation with power calcs (not supported with multiprocessing power calcs)

return_noisy_conc_itters – int <= nsims, default = 0 Number of noisy simulations to return if 0 then no noisy simulations are returned, not supported with multiprocessing power calcs

only_significant_noisy – bool if True then only return noisy simulations where a change was detected if there are fewer noisy simulations with changes detected than return_noisy_conc_itters all significant simulations will be returned. if there are no noisy simulations with changes detected then and empty dataframe is returned

- mulitprocess_power_calcs(outpath: {Path, None, str}, idv_vals: numpy.ndarray, error_base_vals: {np.ndarray, float}, samp_years_vals: {np.ndarray, int}, samp_per_year_vals: {np.ndarray, int}, implementation_time_alt_vals: {np.ndarray, int}, initial_conc_vals: {np.ndarray, float}, target_conc_alt_vals: {np.ndarray, float}, prev_slope_vals: {np.ndarray, float}, max_conc_lim_vals: {np.ndarray, float}, min_conc_lim_vals: {np.ndarray, float}, mrt_model_vals: {np.ndarray, str}, mrt_vals: {np.ndarray, float}, target_conc_base_vals: {np.ndarray, float, None} = None, implementation_time_base_vals: {np.ndarray, int, None} = None, error_alt_vals: {np.ndarray, float, None} = None, delay_years_vals: {np.ndarray, int, None} = None, mrt_p1_vals: {np.ndarray, float, None} = None, frac_p1_vals: {np.ndarray, float, None} = None, f_p1_vals: {np.ndarray, float, None} = None, f_p2_vals: {np.ndarray, float, None} = None, seed_alt_vals: {np.ndarray, int, None} = None, seed_base_vals: {np.ndarray, int, None} = None, run=True, debug_mode=False, **kwargs)[source]#

multiprocessing wrapper for power_calc, see power_calc for details

- Parameters:

outpath – path to save results to or None (no save)

idv_vals – id values for each simulation

error_base_vals – standard deviation of noise to add to the base time series for each simulation

samp_years_vals – sampling years for each simulation

samp_per_year_vals – sampling per year for each simulation

implementation_time_alt_vals – implementation time for the alternative scenario for each simulation

initial_conc_vals – initial concentration for each simulation

target_conc_alt_vals – target concentration for the alternative scenario for each simulation

prev_slope_vals – previous slope for each simulation

max_conc_lim_vals – maximum concentration limit for each simulation

min_conc_lim_vals – minimum concentration limit for the source for each simulation

mrt_model_vals – mrt model for each simulation

mrt_vals – mean residence time for each simulation

target_conc_base_vals – target concentration for the base scenario for each simulation, if None then target_conc_base = initial_conc

implementation_time_base_vals – implementation time for the base scenario for each simulation, if None then implementation_time_base = implementation_time_alt

error_alt_vals – standard deviation of the noise to add to the alt concentration time series, if None then error_alt = error_base

delay_years_vals – number of years to delay the start of the monitoring for each simulation, If the delay_years does not allow enough samples to be collected then an exception will be raised. If delay_years is 0 then the full length of the concentration time series will be used

mrt_p1_vals – mean residence time of the first piston flow model for each simulation Only used for binary_exponential_piston_flow model

frac_p1_vals – fraction of the first piston flow model for each simulation Only used for binary_exponential_piston_flow model

f_p1_vals – the exponential fraction of the first piston flow model for each simulation Only used for binary_exponential_piston_flow model

f_p2_vals – the exponential fraction of the second piston flow model for each simulation Only used for binary_exponential_piston_flow model

seed_alt_vals – random seed to generate the alternative noise for each simulation. One of: ndarray (integer seeds), int, None (no seeds passed, but will record the seed used)

seed_base_vals – random seed to generate the base noise for each simulation. One of: ndarray (integer seeds), int, None (no seeds passed, but will record the seed used)

Note seed_base != seed_alt (the same noise will be added to both time series, making the analysis useless)

- Parameters:

run – if True run the simulations, if False just build the run_dict and print the number of simulations

debug_mode – if True run as single process to allow for easier debugging

kwargs – other kwargs to pass directly to the output dataframe must be either a single value or an array of values with the same shape as id_vals

- Returns:

pd.DataFrame with the power calc results note power is percent 0-100

- plot_iteration(y0_base, y0_alt, true_conc_base, true_conc_alt, ax=None)[source]#

plot the concentration data itteration and the true concentration data

- Parameters:

y0_base – noisy concentration data for the base scenario

y0_alt – noisy concentration data for the alt scenario

true_conc_base – True concentration data for the base scenario

true_conc_alt – True concentration data for the alt scenario

ax – matplotlib axis to plot to, if None then a new figure and axis will be created

- Returns:

- power_calc(idv, error_base: float, mrt_model: str, samp_years: int, samp_per_year: int, implementation_time_alt: int, initial_conc: float, target_conc_alt: float, prev_slope: float, max_conc_lim: float, min_conc_lim: float, mrt: float = 0, target_conc_base: {float, None} = None, implementation_time_base: {int, None} = None, error_alt: {float, None} = None, delay_years: {int} = 0, mrt_p1: {float, None} = None, frac_p1: {float, None} = None, f_p1: {float, None} = None, f_p2: {float, None} = None, seed_base: {int, None} = None, seed_alt: {int, None} = None, testnitter=None, **kwargs)[source]#

calculate the counterfactual detection power of auto created concentration time series

- Parameters:

idv – identifiers for the power calc sites, passed straight through to the output

error_base – standard deviation of the noise to add to the base concentration time series

mrt_model –

the model to use for the mean residence time options:

’piston_flow’: use the piston flow model (no mixing, default)

’binary_exponential_piston_flow’: use the binary exponential piston flow model

For unitary exponential_piston_flow model set frac_1 = 1 and mrt_p1 = mrt

For no lag, set mrt=0, mrt_model=’piston_flow’

samp_years – number of years to sample

samp_per_year – number of samples to collect each year

implementation_time_alt – number of years over which the target concentration_alt is reached

initial_conc – initial median value of the concentration

target_conc_alt – target concentration for the alt scenario

prev_slope – slope of the previous data (e.g. prior to the initial concentration)

max_conc_lim – maximum concentration limit user specified or None (default)

min_conc_lim – minimum concentration limit for the source, only used for the binary_exponential_piston_flow model

mrt – the mean residence time of the site

target_conc_base – the target concentration for the base scenario, if None then target_conc_base = initial_conc

implementation_time_base – number of years over which the target concentration_base is reached, if None then implementation_time_base = implementation_time_alt

error_alt – standard deviation of the noise to add to the alt concentration time series, if None then error_alt = error_base

delay_years – number of years to delay the start of the monitoring, If the delay_years does not allow enough samples to be collected then an exception will be raised. If delay_years is 0 then the full length of the concentration time series will be used

Options for binary_exponential_piston_flow model:

- Parameters:

mrt_p1 – the mean residence time of the first piston flow model (only used for binary_exponential_piston_flow model)

frac_p1 – the fraction of the first piston flow model (only used for binary_exponential_piston_flow model)

f_p1 – the fraction of the first piston flow model (only used for binary_exponential_piston_flow model)

f_p2 – the fraction of the first piston flow model (only used for binary_exponential_piston_flow model)

seed_base – seed for the random number generator for the base scenario, if None then a random seed will be generated and returned with the output

seed_alt – seed for the random number generator for the alt scenario, if None then a random seed will be generated and returned with the output

testnitter – None (usually) or a different nitter then self.niter for testing run times

kwargs – any other kwargs to pass directly to the output Series

- Returns:

pd.Series with the power calc results note power is percent 0-100

Possible other dataframes if self.return_true_conc is True or self.return_noisy_conc_itters > 0 in which case a dictionary will be returned: {‘power’: power_df, # always ‘true_conc’: true_conc_ts, if self.return_true_conc is True ‘noisy_conc’ : noisy_conc_ts, if self.return_noisy_conc_itters > 0 }

- set_condensed_mode(target_conc_per=1, initial_conc_per=1, error_per=2, prev_slope_per=2, max_conc_lim_per=1, min_conc_lim_per=1, mrt_per=0, mrt_p1_per=2, frac_p1_per=2, f_p1_per=2, f_p2_per=2)[source]#

set calculator to condense the number of runs based by rounding the inputs to a specified precision

- Parameters:

target_conc_per – precision to round target_conc to (2 = 0.01)

initial_conc_per – precision to round initial_conc to (2 = 0.01)

error_per – precision to round error to (2 = 0.01)

prev_slope_per – precision to round previous_slope to (2 = 0.01)

max_conc_lim_per – precision to round max_conc_lim to (2 = 0.01)

min_conc_lim_per – precision to round min_conc_lim to (2 = 0.01)

mrt_per – precision to round mrt to

mrt_p1_per – precision to round mrt_p1 to

frac_p1_per – precision to round frac_p1 to

f_p1_per – precision to round f_p1 to

f_p2_per – precision to round f_p2 to

- Returns:

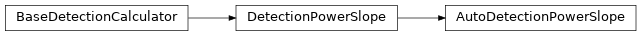

- class AutoDetectionPowerSlope(significance_mode='linear-regression', nsims=1000, min_p_value=0.05, min_samples=10, expect_slope='auto', efficent_mode=True, nparts=None, min_part_size=10, no_trend_alpha=0.5, mpmk_check_step=1, mpmk_efficent_min=10, mpmk_window=0.05, nsims_pettit=2000, ncores=None, log_level=logging.INFO, return_true_conc=False, return_noisy_conc_itters=0, only_significant_noisy=False, print_freq=None)[source]#

Bases:

DetectionPowerSlope

This class is used to calculate the slope detection power of an auto created concentration time series. The user specifies an initial concentration, a target concentration. Other parameters include groundwater age distribution models and parameters, implementation time and the slope of the previous data. The user then specifies the sampling duration, and frequency. The power is calculated by adding many noise realisations to the concentration data and then running one of multiple change detection tests on the noisy data.

The Power is calculated as the percentage (0-100) of simulations which detect a slope.

- Parameters:

significance_mode –

significance mode to use, options:

linear-regression: linear regression of the concentration data from time 0 to the end change detected if p < min_p_value

linear-regression-from-[max|min]: linear regression of the concentration data from the maximum concentration of the noise free concentration data to the end change detected if p < min_p_value

mann-kendall: mann-kendall test of the concentration data from time 0 to the end, change detected if p < min_p_value

mann-kendall-from-[max|min]: mann-kendall test of the concentration data from the maximum/minimum of the noisefree concentration data to the end, change detected if p < min_p_value

n-section-mann-kendall: 2+ part mann-kendall test to identify change points. if change points are detected then a change is detected

pettitt-test: pettitt test to identify change points. if change points are detected then a change is detected

nsims – number of noise simulations to run for each change detection (e.g. nsims=1000, power= number of detected changes/1000 noise simulations)

min_p_value – minimum p value to consider a change detected

min_samples – minimum number of samples required, less than this number of samples will raise an exception

expect_slope –

expected slope of the concentration data, use depends on significance mode:

linear-regression, linear-regression-from-max, mann-kendall, mann-kendall-from-max: one of 1 (increasing), -1 (decreasing), or ‘auto’ will match the slope of the concentration data before noise is added

n-section-mann-kendall: expected trend in each part of the time series (1 increasing, -1 decreasing, 0 no trend)

pettitt-test: not used.

efficent_mode –

bool, default = True, if True then

For linear regression and MannKendall based tests: run the test on the noise free data to see if any change can be detected, if no change is detected then the test will not be on the noisy data

For MultiPartMannKendall test: the test will be run on the noise free data to detect best change points and then the test will be run on the noisy data for a smaller window centered on the True change point see: “mpmk_efficent_min” and “mpmk_window”

For Pettitt Test: Not implemented, will be ignored and a waring passed

nparts – number of parts to use for the n-section-mann-kendall test (not used for other tests)

min_part_size – minimum number of samples in each part for the n-section-mann-kendall test (not used for other tests)

no_trend_alpha – alpha value to use for the no trend sections in the n-section-mann-kendall test trend less sections are only accepted if p > no_trend_alpha (not used for other tests)

mpmk_check_step – int or function, default = 1, number of samples to check for a change point in the MultiPartMannKendall test, used in both efficent_mode=True and efficent_mode=False if mpmk is a function it must take a single argument (n, number of samples) and return an integer check step

mpmk_efficent_min – int, default = 10, minimum number of possible change points to assess only used if efficent_mode = True The minimum number of breakpoints to test (mpmk_efficent_min) is always respected (i.e. if the window size is less than the minimum number of breakpoints to test, then the window size will be increased to the minimum number of breakpoints to test, but the space between breakpoints will still be defined by check_step). You can specify the exact number of breakpoints to check by setting mpmk_efficent_min=n breakpoints and setting mpmk_window=0

mpmk_window – float, default = 0.05, define the window around the true detected change point to run the MultiPartMannKendall. The detction window is defined as: (cp - mpmk_window*n, cp + mpmk_window*n) where cp is the detected change point and n is the number of samples in the time series Where both a mpmk_window and a check_step>1 is passed the mpmk_window will be used to define the window size and the check_step will be used to define the step size within the window.

nsims_pettit – number of simulations to run for calculating the pvalue of the pettitt test (not used for other tests)

ncores – number of cores to use for multiprocessing, None will use all available cores

log_level – logging level for multiprocessing subprocesses

return_true_conc – return the true concentration time series for each simulation with power calcs (not supported with multiprocessing power calcs)

return_noisy_conc_itters – int <= nsims, default = 0 Number of noisy simulations to return if 0 then no noisy simulations are returned, not supported with multiprocessing power calcs

only_significant_noisy – bool if True then only return noisy simulations where a change was detected if there are fewer noisy simulations with changes detected than return_noisy_conc_itters all significant simulations will be returned. if there are no noisy simulations with changes detected then and empty dataframe is returned

print_freq – None or int: if None then no progress will be printed, if int then progress will be printed every print_freq simulations (n%print_freq==0)

- mulitprocess_power_calcs(outpath: {Path, None, str}, idv_vals: numpy.ndarray, error_vals: {np.ndarray, float}, samp_years_vals: {np.ndarray, int}, samp_per_year_vals: {np.ndarray, int}, implementation_time_vals: {np.ndarray, int}, initial_conc_vals: {np.ndarray, float}, target_conc_vals: {np.ndarray, float}, prev_slope_vals: {np.ndarray, float}, max_conc_lim_vals: {np.ndarray, float}, min_conc_lim_vals: {np.ndarray, float}, mrt_model_vals: {np.ndarray, str}, mrt_vals: {np.ndarray, float}, mrt_p1_vals: {np.ndarray, float, None} = None, frac_p1_vals: {np.ndarray, float, None} = None, f_p1_vals: {np.ndarray, float, None} = None, f_p2_vals: {np.ndarray, float, None} = None, seed_vals: {np.ndarray, int, None} = None, run=True, debug_mode=False, **kwargs)[source]#

multiprocessing wrapper for power_calc, see power_calc for details

- Parameters:

outpath – a path to save the results to or None (no save), df is returned regardless

idv_vals – an array of identifiers for each simulation

error_vals – The standard deviation of the noise for each simulation

samp_years_vals – the number of years to sample

samp_per_year_vals – The number of samples to collect each year

implementation_time_vals – The number of years over which reductions are implemented

initial_conc_vals – The initial concentration for each simulation

target_conc_vals – target concentration for the simulation

prev_slope_vals – previous slope for each simulation

max_conc_lim_vals – maximum concentration limit for each simulation

min_conc_lim_vals – minimum concentration limit for the source for each simulation

mrt_model_vals – mrt model for each simulation

mrt_vals – mean residence time for each simulation

mrt_p1_vals – mean residence time of the first piston flow model for each simulation Only used for binary_exponential_piston_flow model

frac_p1_vals – fraction of the first piston flow model for each simulation Only used for binary_exponential_piston_flow model

f_p1_vals – the exponential fraction of the first piston flow model for each simulation Only used for binary_exponential_piston_flow model

f_p2_vals – the exponential fraction of the second piston flow model for each simulation Only used for binary_exponential_piston_flow model

seed –

the random seed for each simulation, one of the following:

None: no seed, random seed will be generated for each simulation (but it will be recorded in the output dataframe)

int: a single seed for all simulations

np.ndarray: an array of seeds, one for each simulation

run – if True run the simulations, if False just build the run_dict and print the number of simulations

debug_mode – if True run as single process to allow for easier debugging

kwargs – other kwargs to pass directly to the output dataframe must be either a single value or an array of values with the same shape as id_vals

- Returns:

dataframe with input data and the results of all of the power calcs. note power is percent 0-100

- plot_iteration(y0, true_conc, ax=None)[source]#

plot the concentration data itteration and the true concentration data if provided as well as the power test results and any predictions from the power test (e.g. the slope of the line used)

- Parameters:

y0 – noisy concentration data

true_conc – true concentration data

- Returns:

fig, ax

- power_calc(idv, error: float, mrt_model: str, samp_years: int, samp_per_year: int, implementation_time: int, initial_conc: float, target_conc: float, prev_slope: float, max_conc_lim: float, min_conc_lim: float, mrt: float = 0, mrt_p1: {float, None} = None, frac_p1: {float, None} = None, f_p1: {float, None} = None, f_p2: {float, None} = None, seed: {int, None} = None, testnitter=None, **kwargs)[source]#

calculate the detection power for a given set of parameters

- Parameters:

idv – identifiers for the power calc sites, passed straight through to the output

error – standard deviation of the noise

mrt_model –

the model to use for the mean residence time options:

’piston_flow’: use the piston flow model (no mixing, default)

’binary_exponential_piston_flow’: use the binary exponential piston flow model for unitary exponential_piston_flow model set frac_1 = 1 and mrt_p1 = mrt for no lag, set mrt=0, mrt_model=’piston_flow’

samp_years – number of years to sample

samp_per_year – number of samples to collect each year

implementation_time – number of years over which reductions are implemented

initial_conc – initial median value of the concentration

target_conc – target concentration to reduce to

prev_slope – slope of the previous data (e.g. prior to the initial concentration)

max_conc_lim – maximum concentration limit user specified or None (default)

min_conc_lim – minimum concentration limit for the source, only used for the binary_exponential_piston_flow model)

mrt – the mean residence time of the site

Options for binary_exponential_piston_flow model:

- Parameters:

mrt_p1 – the mean residence time of the first piston flow model (only used for binary_exponential_piston_flow model)

frac_p1 – the fraction of the first piston flow model (only used for binary_exponential_piston_flow model)

f_p1 – the fraction of the first piston flow model (only used for binary_exponential_piston_flow model)

f_p2 – the fraction of the first piston flow model (only used for binary_exponential_piston_flow model)

Model run options:

- Parameters:

seed – int or None for random seed

testnitter – None (usually) or a different nitter then self.niter for testing run times

kwargs – kwargs passed to the output series (e.g. region=’temp’ will yield a ‘region’ index with a value of ‘temp’)

- Returns:

pd.Seris with the power calc results note power is percent 0-100 Possible other dataframes if self.return_true_conc is True or self.return_noisy_conc_itters > 0 in which case a dictionary will be returned:

{‘power’: power_df, # always ‘true_conc’: true_conc_ts, if self.return_true_conc is True ‘noisy_conc’ : noisy_conc_ts, if self.return_noisy_conc_itters > 0 }

- set_condensed_mode(target_conc_per=1, initial_conc_per=1, error_per=2, prev_slope_per=2, max_conc_lim_per=1, min_conc_lim_per=1, mrt_per=0, mrt_p1_per=2, frac_p1_per=2, f_p1_per=2, f_p2_per=2)[source]#

set calculator to condense the number of runs based by rounding the inputs to a specified precision

- Parameters:

target_conc_per – precision to round target_conc to (2 = 0.01)

initial_conc_per – precision to round initial_conc to (2 = 0.01)

error_per – precision to round error to (2 = 0.01)

prev_slope_per – precision to round previous_slope to (2 = 0.01)

max_conc_lim_per – precision to round max_conc_lim to (2 = 0.01)

min_conc_lim_per – precision to round min_conc_lim to (2 = 0.01)

mrt_per – precision to round mrt to

mrt_p1_per – precision to round mrt_p1 to

frac_p1_per – precision to round frac_p1 to

f_p1_per – precision to round f_p1 to

f_p2_per – precision to round f_p2 to

- Returns:

- class DetectionPowerCalculator(*args, **kwargs)[source]#

The DetectionPowerCalculator has been depreciated in version v2.0.0. To retain the old capability use v1.0.0.

- Parameters:

args – dummy

kwargs – dummy

- class DetectionPowerCounterFactual(significance_mode, nsims=1000, p_value=0.05, min_samples=10, alternative='alt!=base', wx_zero_method='wilcox', wx_correction=False, wx_method='auto', ncores=None, log_level=logging.INFO, return_true_conc=False, return_noisy_conc_itters=0, only_significant_noisy=False)[source]#

Bases:

komanawa.gw_detect_power.base_detection_calculator.BaseDetectionCalculator

This class is used to calculate the counterfactual detection power of a pair of concentration time series The user specifies the true concentration time series for the base and alt scenarios and the noise level for both scenarios. The power is calculated by adding many noise realisations to the true concentration time series and running a paired t test or wilcoxon signed rank test to determine if the null hypothesis (The scenarios are the same) can be rejected.

The Power is calculated as the percentage (0-100) of simulations which reject the null hypothesis.

- Parameters:

significance_mode –

str, one of:

’paired-t-test’: paired t test (parametric), scipy.stats.ttest_rel

’wilcoxon-signed-rank-test’: wilcoxon signed rank test (non-parametric), scipy.stats.wilcoxon

nsims – number of noise simulations to run for each change detection (e.g. nsims=1000, power= number of detected changes/1000 noise simulations)

p_value –

minimum p value (see also alternative), if:

p >= p_value the null hypothesis will not be rejected (base and alt are the same)

p < p_value the null hypothesis will be rejected (base and alt are different)

min_samples – minimum number of samples required, less than this number of samples will raise an exception

alternative –

str, one of:

’alt!=base’: two sided test (default),

’alt<base’: one sided test ~

’alt>base’

wx_zero_method –

str, one of:

“wilcox”: Discards all zero-differences (default); see [4].

“pratt”: Includes zero-differences in the ranking process, but drops the ranks of the zeros (more conservative); see [3]. In this case, the normal approximation is adjusted as in [5].

“zsplit”: Includes zero-differences in the ranking process and splits the zero rank between positive and negative ones.

for more info see: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.wilcoxon.html

wx_correction – bool, If True, apply continuity correction by adjusting the Wilcoxon rank statistic by 0.5 towards the mean value when computing the z-statistic. Default is False.

wx_method – str, see scipy.stats.wilcoxon for more info

ncores – number of cores to use for multiprocessing, None will use all available cores

log_level – logging level for multiprocessing subprocesses

return_true_conc – return the true concentration time series for each simulation with power calcs (not supported with multiprocessing power calcs)

return_noisy_conc_itters – int <= nsims, default = 0 Number of noisy simulations to return if 0 then no noisy simulations are returned, not supported with multiprocessing power calcs

only_significant_noisy – bool if True then only return noisy simulations where a change was detected if there are fewer noisy simulations with changes detected than return_noisy_conc_itters all significant simulations will be returned. if there are no noisy simulations with changes detected then and empty dataframe is returned

- mulitprocess_power_calcs(outpath: {Path, None, str}, idv_vals: numpy.ndarray, true_conc_base_vals: {np.ndarray, list}, true_conc_alt_vals: {np.ndarray, list}, error_base_vals: {np.ndarray, None, float}, error_alt_vals: {np.ndarray, None, float} = None, seed_alt_vals_vals: {np.ndarray, int, None} = None, seed_base_vals_vals: {np.ndarray, int, None} = None, run=True, debug_mode=False, **kwargs)[source]#

multiprocessing wrapper for power_calc, see power_calc for details note that if a given run raises and exception the traceback for the exception will be included in the returned dataset under the column ‘python_error’ if ‘python_error’ is None then the run was successful to change the number of cores used pass n_cores to the constructor init

- Parameters:

outpath – path to save results to or None (no save)

idv_vals – id values for each simulation

All values from here on out should be either a single value or an array of values with the same shape as id_vals

- Parameters:

true_conc_base_vals – time series concentration dta for the ‘base’ scenario. Note sampling frequency is assumed to be correct.

true_conc_alt_vals – time series concentration dta for the ‘alt’ scenario. Note sampling frequency is assumed to be correct.

error_base_vals – standard deviation of noise to add to the base time series for each simulation

error_alt_vals – standard deviation of noise to add to the alt time series for each simulation

seed_alt_vals_vals –

random seed to generate the alternative noise. One of:

ndarray (integer seeds),

None (no seeds passed, but will record the seed used)

int (1 seed for all simulations)

seed_base_vals_vals –

random seed to generate the base noise. One of:

ndarray (integer seeds),

None (no seeds passed, but will record the seed used)

int (1 seed for all simulations)

Note seed_base != seed_alt (the same noise will be added to both time series, making the analysis useless) :param run: if True run the simulations, if False just build the run_dict and print the number of simulations :param debug_mode: if True run as single process to allow for easier debugging :param kwargs: any other kwargs to pass directly to the output dataframe :return: dataframe with input data and the results of all of the power calcs. note power is percent 0-100

- plot_iteration(y0_base, y0_alt, true_conc_base, true_conc_alt, ax=None)[source]#

plot the concentration data itteration and the true concentration data

- Parameters:

y0_base – noisy concentration data for the base scenario

y0_alt – noisy concentration data for the alt scenario

true_conc_base – True concentration data for the base scenario

true_conc_alt – True concentration data for the alt scenario

ax – matplotlib axis to plot to, if None then a new figure and axis will be created

- Returns:

- power_calc(idv, error_base: float, true_conc_base: numpy.ndarray, true_conc_alt: numpy.ndarray, error_alt: {float, None} = None, seed_base: {int, None} = None, seed_alt: {int, None} = None, testnitter=None, **kwargs)[source]#

calculate the counterfactual detection power of a pair of concentration time series note the power is calculated using the sampling frequency of the true_conc_base/alt, if you want to test the power at a different sampling frequency then you should resample the true_conc_base/alt before passing it to this function

- Parameters:

idv – identifiers for the power calc sites, passed straight through to the output

error_base – standard deviation of the noise to add to the base concentration time series

true_conc_base – the true concentration timeseries for the base scenario

true_conc_alt – the true concentration timeseries for the alt scenario

error_alt – standard deviation of the noise to add to the alt concentration time series, if None then error_alt = error_base

seed_base – seed for the random number generator for the base scenario, if None then a random seed will be generated and returned with the output

seed_alt – seed for the random number generator for the alt scenario, if None then a random seed will be generated and returned with the output

testnitter – None (usually) or a different nitter then self.niter for testing run times

kwargs – any other kwargs to pass directly to the output Series

- Returns:

pd.Series with the power calc results note power is percent 0-100

Possible other dataframes if self.return_true_conc is True or self.return_noisy_conc_itters > 0 in which case a dictionary will be returned: {‘power’: power_df, # always ‘true_conc’: true_conc_ts, if self.return_true_conc is True ‘noisy_conc’ : noisy_conc_ts, if self.return_noisy_conc_itters > 0 }

- class DetectionPowerSlope(significance_mode='linear-regression', nsims=1000, min_p_value=0.05, min_samples=10, expect_slope='auto', efficent_mode=True, nparts=None, min_part_size=10, no_trend_alpha=0.5, mpmk_check_step=1, mpmk_efficent_min=10, mpmk_window=0.05, nsims_pettit=2000, ncores=None, log_level=logging.INFO, return_true_conc=False, return_noisy_conc_itters=0, only_significant_noisy=False, print_freq=None)[source]#

Bases:

komanawa.gw_detect_power.base_detection_calculator.BaseDetectionCalculator

The DetectionPowerSlope class is used to calculate the power of a change detection test based on observing a slope in the concentration data. The user passes a True concentration time series and the power is calculated by adding many noise realisations to the concentration data and then running one of multiple change detection tests on the noisy data.

The Power is calculated as the percentage (0-100) of simulations which detect a slope.

- Parameters:

significance_mode –

significance mode to use, options:

linear-regression: linear regression of the concentration data from time 0 to the end change detected if p < min_p_value

linear-regression-from-[max|min]: linear regression of the concentration data from the maximum concentration of the noise free concentration data to the end change detected if p < min_p_value

mann-kendall: mann-kendall test of the concentration data from time 0 to the end, change detected if p < min_p_value

mann-kendall-from-[max|min]: mann-kendall test of the concentration data from the maximum/minimum of the noise free concentration data to the end, change detected if p < min_p_value

n-section-mann-kendall: 2+ part mann-kendall test to identify change points. if change points are detected then a change is detected

pettitt-test: pettitt test to identify change points. if change points are detected then a change is detected

nsims – number of noise simulations to run for each change detection (e.g. nsims=1000, power= number of detected changes/1000 noise simulations)

min_p_value – minimum p value to consider a change detected

min_samples – minimum number of samples required, less than this number of samples will raise an exception

expect_slope –

expected slope of the concentration data, use depends on significance mode:

linear-regression, linear-regression-from-max, mann-kendall, or mann-kendall-from-max: * one of 1 (increasing), -1 (decreasing), or ‘auto’ will match the slope of the concentration data before noise is added

n-section-mann-kendall: expected trend in each part of the time series (1 increasing, -1 decreasing, 0 no trend)

pettitt-test: not used.

efficent_mode –

bool, default = True, if True then

For linear regression and MannKendall based tests: run the test on the noise free data to see if any change can be detected, if no change is detected then the test will not be on the noisy data

For MultiPartMannKendall test: the test will be run on the noise free data to detect best change points and then the test will be run on the noisy data for a smaller window centered on the True change point see: * mpmk_efficent_min, * mpmk_window

For Pettitt Test: Not implemented, will be ignored and a waring passed

nparts – number of parts to use for the n-section-mann-kendall test (not used for other tests)

min_part_size – minimum number of samples in each part for the n-section-mann-kendall test (not used for other tests)

no_trend_alpha – alpha value to use for the no trend sections in the n-section-mann-kendall test trendless sections are only accepted if p > no_trend_alpha (not used for other tests)

mpmk_check_step – int or function, default = 1, number of samples to check for a change point in the MultiPartMannKendall test, used in both efficent_mode=True and efficent_mode=False if mpmk is a function it must take a single argument (n, number of samples) and return an integer check step

mpmk_efficent_min – int, default = 10, minimum number of possible change points to assess only used if efficent_mode = True The minimum number of breakpoints to test (mpmk_efficent_min) is always respected (i.e. if the window size is less than the minimum number of breakpoints to test, then the window size will be increased to the minimum number of breakpoints to test, but the space between breakpoints will still be defined by check_step). You can specify the exact number of breakpoints to check by setting mpmk_efficent_min=n breakpoints and setting mpmk_window=0

mpmk_window – float, default = 0.05, define the window around the true detected change point to run the MultiPartMannKendall. The detction window is defined as: (cp - mpmk_window*n, cp + mpmk_window*n) where cp is the detected change point and n is the number of samples in the time series Whe`re both a mpmk_window and a check_step>1 is passed the mpmk_window will be used to de`fine the window size and the check_step will be used to define the step size within the window.`

nsims_pettit – number of simulations to run for calc`ulating the pvalue of the pettitt test (not used for other tests)

ncores – number of cores to use for multiprocessing, None will use all available cores

log_level – logging level for multiprocessing subprocesses

return_true_conc – return the true concentration time series for each simulation with power calcs (not supported with multiprocessing power calcs)

return_noisy_conc_itters – int <= nsims, default = 0 Number of noisy simulations to return. if 0 then no noisy simulations are returned, not supported with multiprocessing power calcs

only_significant_noisy – bool if True then only return noisy simulations where a change was detected if there are fewer noisy simulations with changes detected than return_noisy_conc_itters all significant simulations will be returned. if there are no noisy simulations with changes detected then and empty dataframe is returned

print_freq – None or int: if None then no progress will be printed, if int then progress will be printed every print_freq simulations (n%print_freq==0)

- mulitprocess_power_calcs(outpath: {Path, None, str}, idv_vals: numpy.ndarray, error_vals: {np.ndarray, float}, true_conc_ts_vals: {np.ndarray, list}, seed_vals: {np.ndarray, int, None} = None, run=True, debug_mode=False, **kwargs)[source]#

multiprocessing wrapper for power_calc, see power_calc for details note that if a given run raises and exception the traceback for the exception will be included in the returned dataset under the column ‘python_error’ if ‘python_error’ is None then the run was successful to change the number of cores used pass n_cores to the constructor init

- Parameters:

outpath – path to save results to or None (no save)

idv_vals – id values for each simulation

All values from here on out should be either a single value or an array of values with the same shape as id_vals

- Parameters:

error_vals – standard deviation of noise to add for each simulation

true_conc_ts_vals – the true concentration time series for each simulation, note that this can be a list of arrays of different lengths for each simulation, as Numpy does not support jagged arrays

seed – ndarray (integer seeds), None (no seeds), or int (1 seed for all simulations)

run – if True run the simulations, if False just build the run_dict and print the number of simulations

debug_mode – if True run as single process to allow for easier debugging

kwargs – any other kwargs to pass directly to the output dataframe

- Returns:

dataframe with input data and the results of all of the power calcs. note power is percent 0-100

- plot_iteration(y0, true_conc, ax=None)[source]#

plot the concentration data itteration and the true concentration data if provided as well as the power test results and any predictions from the power test (e.g. the slope of the line used)

- Parameters:

y0 – noisy concentration data

true_conc – true concentration data

- Returns:

fig, ax

- power_calc(idv, error: float, true_conc_ts: numpy.ndarray, seed: {int, None} = None, testnitter=None, **kwargs)[source]#

calculate the slope detection power of a given concentration time series, note the power is calculated using the sampling frequency of the true_conc_ts, if you want to test the power at a different sampling frequency then you should resample the true_conc_ts before passing it to this function

- Parameters:

idv – identifiers for the power calc sites, passed straight through to the output

error – standard deviation of the noise

true_conc_ts – the true concentration timeseries for the power calc

seed – int or None for random seed

testnitter – None (usually) or a different nitter then self.niter for testing run times

kwargs – any other kwargs to pass directly to the output Series

- Returns:

pd.Series with the power calc results note power is percent 0-100

Possible other dataframes if self.return_true_conc is True or self.return_noisy_conc_itters > 0 in which case a dictionary will be returned:

{‘power’: power_df, # always ‘true_conc’: true_conc_ts, if self.return_true_conc is True ‘noisy_conc’ : noisy_conc_ts, if self.return_noisy_conc_itters > 0 }